A Brief History of Artificial Intelligence

In the 1930s, an American mathematician roamed the halls of MIT, pondering a question: could logic be expressed mechanically, in a machine? His name was Claude Shannon. In his master’s thesis he connected Boolean algebra—the mathematics of true and false—to the on-off states of electrical circuits. This leap of insight set the stage for the computer revolution: from clacking relays and vacuum tubes to the miniature processors in our pockets, all the way to the neural networks powering ChatGPT.

Boolean algebra was devised by George Boole in the mid-19th century. It’s a mathematical formalization of logic, distilling reasoning into a precise framework of two values, true and false, and operations like AND, OR, and NOT that define how truths combine or invert. A largely self-taught mathematician, Boole aimed to improve on traditional Aristotelian logic, believing this could reveal the fundamental laws of human thought.

For decades, Boolean algebra remained mostly a mathematical curiosity. That all changed in 1937, when Shannon realized it could be the blueprint for building circuits that “think,” at least in a mechanical sense. By wiring switches (and later transistors) in patterns that mirrored Boolean logic, you could make a machine that performed logical operations. One switch might represent “true” when closed and “false” when open. Connect them in a certain arrangement, and you have a physical realization of a logical statement. For the first time, logic was not just an abstract notion in philosophers’ heads; it could be liberated. Deus in machina.
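To make the idea concrete, here is a minimal Python sketch of the switch-to-logic mapping in its common modern reading (not Shannon’s own notation). Each switch is modeled as a bool, with True meaning closed; switches wired in series conduct only when all are closed (AND), and parallel branches conduct when any one does (OR). The three-switch “majority” circuit and all function names are hypothetical, chosen only for illustration.

```python
from itertools import product

def series(*switches: bool) -> bool:
    """Switches wired in series: current flows only if every switch is closed (AND)."""
    return all(switches)

def parallel(*branches: bool) -> bool:
    """Branches wired in parallel: current flows if any branch conducts (OR)."""
    return any(branches)

def majority_circuit(a: bool, b: bool, c: bool) -> bool:
    """Three parallel branches, each a pair of switches in series.
    The lamp lights when at least two of the three switches are closed."""
    return parallel(series(a, b), series(a, c), series(b, c))

# Print the circuit's full truth table (1 = switch closed / lamp on).
for a, b, c in product([False, True], repeat=3):
    print(int(a), int(b), int(c), "->", int(majority_circuit(a, b, c)))
```

The truth table shows the lamp lighting for exactly the four settings in which two or more switches are closed: a logical statement realized purely as wiring.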

Why binary? Because it’s robust. Electrical signals are easy to distinguish in two states—current flowing or not, high voltage or low. Any complexity—numbers, letters, images—can be encoded in binary form. It’s like a baseline alphabet that everything else reduces to, a lingua franca for everything from your favorite movie streaming on a tablet to the complex calculations behind weather forecasts.

The early manifestations of these systems were enormous and fragile, relying on vacuum tubes or relays that could fail at any moment. Worse still, programming these machines was a physical, mechanical ordeal. Every new task required rewiring the machine itself or feeding it specially punched cards, making the process slow, inflexible, and prone to error. As these primitive machines demonstrated their potential, a crucial question emerged: how could they be instructed more flexibly, without rewiring them for every new task?

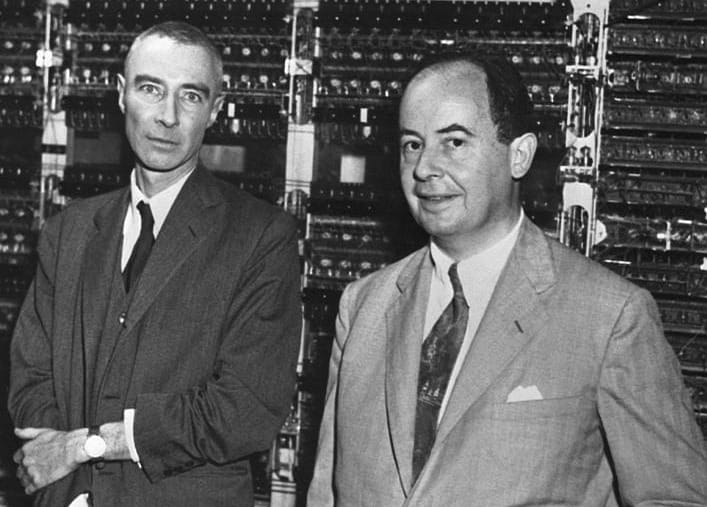

This leads us to John von Neumann, one of the 20th century’s great polymaths. His crucial insight was that instructions themselves could be stored in the machine’s memory, right alongside the data those instructions manipulate. This seems obvious now, but at the time, it was revolutionary. By making instructions just another form of data in memory, we gained the ability to change what a computer does simply by loading new instructions. The same hardware could run a payroll system one moment and perform orbital calculations the next. The computer finally became a universal machine, and software was born.

The von Neumann architecture laid the foundation for a scaffolding of abstractions that allowed computers to grow in complexity and versatility. At its core is a stack of translations between simple layers and more complex ones. The base layer is binary machine code; above it sit assembly language and then higher-level programming languages, whose human-readable instructions are compiled or interpreted back down into binary. Over time, these layers of abstraction expanded to include systems like graphical user interfaces, where a simple click triggers thousands of hidden actions, all ultimately translating back to binary logic. The marriage between hardware and software sparked a relentless pursuit of faster chips and more capable programs, driving innovation to tackle increasingly ambitious problems.

For most, the focus of software was practical applications: spreadsheets, databases, writing tools, and the like. But a small group of researchers remained captivated by the idea of machines that could think. Just as the pioneers of flight studied birds, they looked to the human brain for inspiration. Alan Turing, believing that human thought could be reduced to logical processes and computation, introduced the Imitation Game in 1950: a test of whether a machine’s responses could be told apart from a human’s.

A few years later, in the summer of 1956, a group of ambitious researchers convened at Dartmouth College in New Hampshire, determined to chart a course toward “artificial intelligence.” John McCarthy, Marvin Minsky, Claude Shannon, and Herbert Simon were among them. They were buoyed by the recent triumphs of digital computing and believed that human intelligence—a complex but ultimately systematic process—could be re-created in a machine. The Dartmouth Conference is often cited as AI’s founding moment, the point where vague dreams hardened into a research agenda.

Early efforts focused on symbolic AI: representing knowledge as symbols and strings of logic, then manipulating them according to carefully coded rules. The logic was explicit and human-readable. For example, you might tell a program: “All men are mortal. Socrates is a man. Therefore, Socrates is mortal.” From these building blocks, researchers hoped to scale up to more complex domains—chess, natural language understanding, even something approaching common sense. For a time, progress looked promising. Programs solved algebra problems, proved theorems, and manipulated symbols in ways that felt eerily like reasoning.
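A toy version of this style of reasoning fits in a few lines of Python. The sketch below is a hypothetical example, not a reconstruction of any historical program: it stores facts as (predicate, subject) pairs and applies “if X then Y” rules over and over until nothing new can be derived, a technique known as forward chaining.

```python
# Facts are (predicate, subject) pairs; each rule says "anything that is X is also Y".
facts = {("man", "Socrates")}   # Socrates is a man.
rules = [("man", "mortal")]     # All men are mortal.

def forward_chain(facts: set[tuple[str, str]],
                  rules: list[tuple[str, str]]) -> set[tuple[str, str]]:
    """Apply every rule repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(known):
                if predicate == premise and (conclusion, subject) not in known:
                    known.add((conclusion, subject))  # a newly derived fact
                    changed = True
    return known

derived = forward_chain(facts, rules)
print(("mortal", "Socrates") in derived)  # True: Socrates is mortal.
```

Every inference here is explicit and human-readable, which was symbolic AI’s great strength. The catch is that someone has to write every rule by hand.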

But ultimately, this was a false dawn. Reality rarely fit into neat categories. The more complex the domain, whether recognizing a face, parsing the nuance in language, or navigating an unpredictable environment, the harder it became to craft rules that accounted for every possible variation. Real life resisted symbolic codification. Researchers discovered that much of human knowledge is implicit, approximate, and contextual. Progress slowed, funding dried up, and by the 1970s the field entered what became known as the “AI Winter.”

While symbolic AI struggled to scale, another idea was surfacing: perhaps intelligence wasn’t about carefully enumerated rules. Perhaps it was about learning patterns directly from experience. Even before Dartmouth, scientists like Warren McCulloch and Walter Pitts had posited that neurons could be modeled as simple binary units, and that networks of such units might exhibit brain-like computation.

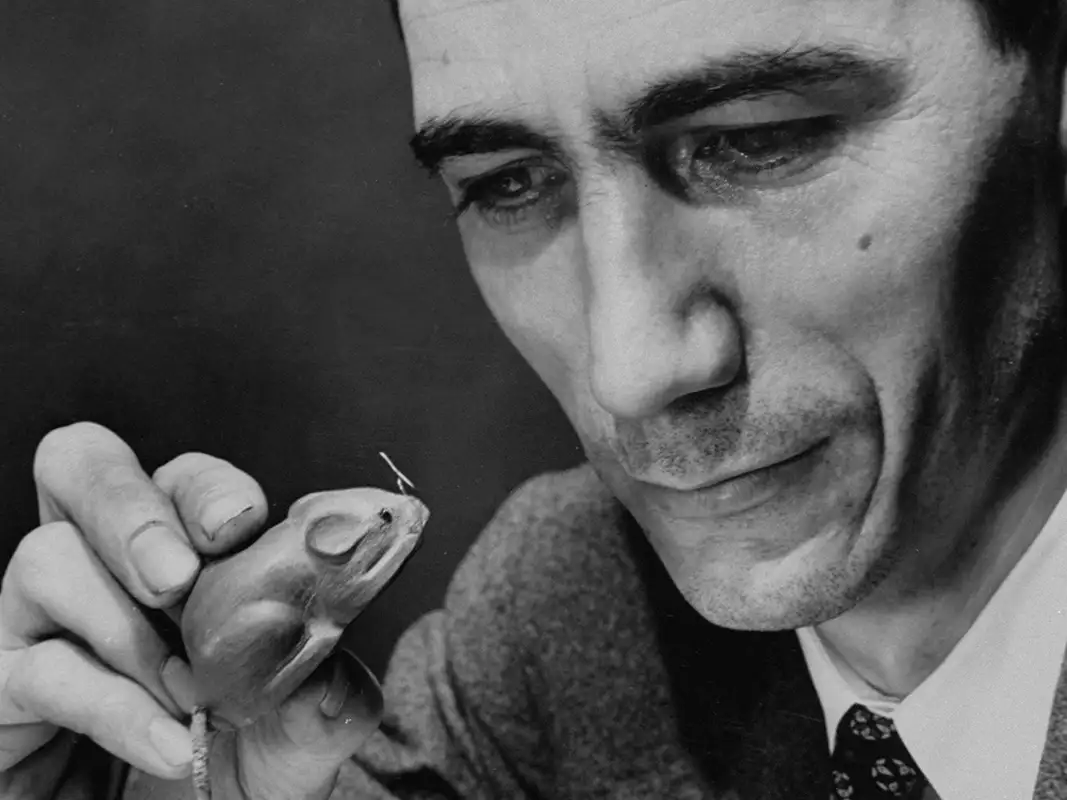

Inspired by the brain’s architecture, Frank Rosenblatt introduced the perceptron in 1958, a simple model that could learn to classify patterns, like basic shapes, by adjusting its internal parameters, known as weights. Though the perceptron was relatively crude, it foreshadowed the critical role parameters would play in modern AI systems. Each weight sets how strongly one input influences the output; by tuning many such numbers against examples, a model can capture the patterns, relationships, and nuances essential for learning and decision-making.
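The perceptron’s learning rule can be sketched in plain Python. This is the simplified textbook form, an assumption rather than a reconstruction of Rosenblatt’s original hardware: output 1 if the weighted sum of the inputs plus a bias is positive, and after each mistake nudge every weight in the direction that would have reduced the error. The training data, the logical AND of two inputs, is a hypothetical example.

```python
def predict(weights, bias, inputs):
    """Fire (output 1) if the weighted sum of inputs plus the bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: after each mistake, shift the weights toward the correct answer."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Hypothetical training set: the logical AND of two binary inputs.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_DATA)
for inputs, target in AND_DATA:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

Because AND is linearly separable (a single straight line can split its outputs), this rule is guaranteed to settle on working weights; on this data it converges within a handful of passes.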

But the perceptron could only handle so much complexity. In 1969, Marvin Minsky and Seymour Papert published a detailed critique showing the perceptron’s limitations, casting doubt on the whole neural-network approach. The field largely abandoned neural nets. Yet a handful of researchers kept the faith, believing that with more complex architectures and better training methods, neural networks might yet deliver on their promise. They needed a way to train networks with multiple layers—layers that could extract ever more abstract features from raw data.

This breakthrough emerged in the 1980s with the invention (or rediscovery) of backpropagation. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams showed how to use the chain rule of calculus to pass a network’s output error backward through its layers, working out how much each weight contributed to the mistake so that every weight could be adjusted automatically to reduce it. This made it practical to train “deep” networks, stacks of layers that could, in principle, learn more complicated patterns. Neural networks began to take on problems that symbolic AI struggled with: pattern recognition, speech processing, and rudimentary vision tasks. They weren’t mainstream yet, but the seed was planted.
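The backward pass can be shown on a deliberately tiny, hypothetical network: one input, one tanh hidden unit, and one output. The sketch applies the chain rule step by step from the loss back to each weight, then uses those gradients for plain gradient descent. The starting weights, input, target, and learning rate are arbitrary values chosen for illustration.

```python
import math

def forward(w1, w2, x):
    """Two-layer network: hidden unit h = tanh(w1 * x), output y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y

def loss_and_grads(w1, w2, x, target):
    """Squared-error loss plus its gradients, computed by backpropagation."""
    h, y = forward(w1, w2, x)
    loss = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the output toward the input.
    dloss_dy = y - target                    # derivative of 0.5 * (y - target)^2
    dloss_dw2 = dloss_dy * h                 # y = w2 * h, so dy/dw2 = h
    dloss_dh = dloss_dy * w2                 # dy/dh = w2
    dloss_dw1 = dloss_dh * (1 - h ** 2) * x  # tanh'(z) = 1 - tanh(z)^2, dz/dw1 = x
    return loss, dloss_dw1, dloss_dw2

w1, w2 = 0.5, -0.3     # arbitrary starting weights
x, target = 1.5, 0.8   # one hypothetical training example
for step in range(200):
    loss, g1, g2 = loss_and_grads(w1, w2, x, target)
    w1 -= 0.1 * g1     # step each weight against its gradient
    w2 -= 0.1 * g2
print(f"loss before the final update: {loss:.6f}")
```

Real networks do the same bookkeeping with matrices instead of single numbers, and modern frameworks compute these derivatives automatically.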

As the millennium turned, neural networks had fallen out of fashion. The approach demanded massive computing power and data, both scarce in an era when Silicon Valley's attention and money were flowing to dot-com startups. Most labs turned to simpler statistical methods that could run on modest hardware, finding success with tasks like speech recognition. But neural networks simmered in the background, waiting for their moment.

That moment arrived in the early 2010s, thanks to three converging factors. First was the explosion of internet content: billions of photos, videos, and blog posts flooding platforms like Facebook, YouTube, and WordPress created a vast ocean of training data. Second, hardware, specifically graphics processors originally designed for video games, offered massive parallel processing power, making it feasible to train large neural networks quickly. Third, algorithmic refinements, such as the ReLU activation function and the dropout technique for reducing overfitting, made deep networks easier to train reliably.

The turning point often cited is the 2012 ImageNet competition, a challenge where algorithms competed to classify objects in photographs. A deep neural network designed by Alex Krizhevsky, working with Ilya Sutskever and Geoffrey Hinton, decisively outperformed all other methods. Dubbed AlexNet, this model ignited the deep-learning revolution. Suddenly, neural networks were not just competitive—they were dominant. Vision tasks, speech recognition, and language modeling all saw dramatic improvements as researchers piled more layers onto their networks and trained on larger datasets.

Tech companies took notice. Google, Facebook, and others poured resources into deep learning and AI generally. While deep neural networks revolutionized pattern recognition, another frontier of AI started tackling decision-making. Enter reinforcement learning, a method where machines learn optimal strategies by trial and error, guided by rewards and penalties. Reinforcement learning captured the public imagination in 2016 with AlphaGo, developed by DeepMind. AlphaGo stunned the world by defeating Lee Sedol, one of the world’s top players of the ancient board game Go, a game so complex it was thought to rely on uniquely human intuition. It marked a shift in AI’s potential, demonstrating that machines could excel in realms requiring long-term planning and adaptability.
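Trial-and-error learning from rewards can be illustrated with tabular Q-learning, a classic reinforcement-learning algorithm that is far simpler than the neural-network and tree-search machinery AlphaGo actually used. In this hypothetical five-cell corridor, the agent earns a reward only on reaching the rightmost cell. It keeps a table of estimated future reward for each state and action, and nudges each estimate toward the reward it observes plus the discounted value of where it lands. The environment and every parameter value are assumptions made for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor with cells 0..4
GOAL = N_STATES - 1   # reaching the rightmost cell ends an episode
ACTIONS = (-1, +1)    # step left or step right

def step(state, action):
    """Move within the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def choose(q, state, epsilon, rng):
    """Epsilon-greedy: sometimes explore at random, otherwise pick the best-known action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # break ties randomly

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Value of the best follow-up action (zero once the episode has ended).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate part of the way toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

After training, the greedy policy should step right from every cell. The discount factor `gamma` makes nearer rewards worth more, which is what pushes the agent toward the shortest path.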

While AI was mastering games and pattern recognition, language remained a stubborn challenge. Early neural translation systems faced a fundamental issue: they had to compress an entire sentence into a single fixed-length list of numbers before translating it, losing important nuance. In 2014, a graduate student named Dzmitry Bahdanau had a breakthrough while interning at Yoshua Bengio's lab. Drawing on his experience learning English in school, he developed a system that could selectively look back at different parts of the original sentence while translating, much as a human translator might. This "attention" mechanism proved consequential and immediately improved results.

The big milestone came in 2017 with the Transformer architecture, introduced by a team at Google in a paper titled "Attention Is All You Need." While humans naturally build a mental model as they read, holding the meaning in working memory, AI systems had no equivalent ability. Transformers changed this by building the entire architecture around Bahdanau's attention concept: rather than reading word by word, they could process an entire passage simultaneously, with every word attending to every other. This gave AI something like our working memory, an ability to see the full context at once.
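The Transformer’s core operation, scaled dot-product attention, can be sketched in a few lines of NumPy. Each token’s query is compared with every token’s key at once, the scores are turned into weights with a softmax, and each output is a weighted blend of the tokens’ values. This is a single-head sketch without masking or the rest of the architecture; the random matrices are hypothetical stand-ins for the projections a real model learns during training.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """Compute softmax(Q K^T / sqrt(d_k)) V and return (outputs, attention weights)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)               # similarity of every query to every key
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for a stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row now sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(4, 8))  # 4 hypothetical token embeddings of width 8
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))  # stand-in projections
out, attn = scaled_dot_product_attention(tokens @ w_q, tokens @ w_k, tokens @ w_v)
print(attn.round(2))
```

Each row of the printed matrix shows how much one token draws on every token in the passage, and each row sums to 1. Because the whole computation is a pair of matrix products, every position is handled in parallel rather than word by word.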

OpenAI’s Chief Scientist, Ilya Sutskever (of AlexNet fame), appeared to understand the implications immediately. Alec Radford, already building a reputation as a legendary programmer, had been experimenting with early chatbot models. In one notable project, he trained a system on Amazon reviews, which unexpectedly developed state-of-the-art sentiment analysis capabilities. This knack for coaxing emergent behaviors from language models would help prove Sutskever right. Their first GPT model showed that transformers, when pre-trained on vast amounts of text and fine-tuned for specific tasks, could demonstrate surprising capabilities.

The team's conviction grew with each experiment, culminating in what they dubbed scaling laws: the observation that model performance improves predictably as model size, training data, and computing power increase. As they pushed the boundaries of model size and training data, new abilities emerged that seemed to transcend the original architecture. The machines weren't just processing language anymore; they were beginning to show signs of understanding it. They learned to complete code, display creativity, and even show glimmers of reasoning, capabilities that emerged naturally from architecture and scale. Vision, audio, and multimodal systems all began to benefit from this approach. AI had found its blueprint.

OpenAI's trajectory would prove transformative. What began as a non-profit research lab meant to serve as a bulwark against Google would become the epicenter of true artificial intelligence. The early GPT models were promising but of use mainly to the research community. Each iteration revealed new capabilities. GPT-2 showed surprising fluency in generating human-like text. GPT-3 demonstrated emergent abilities that startled even its creators.

But it was ChatGPT, built on GPT-3.5, that captured the world's imagination. Released in late 2022, it made AI accessible to everyone, from children asking homework questions to professionals seeking creative solutions. The interface was simple, but behind it lay a profound achievement: a system that could engage in open-ended dialogue, reason about complex topics, and adapt its responses to context. A computer could understand us for the first time.

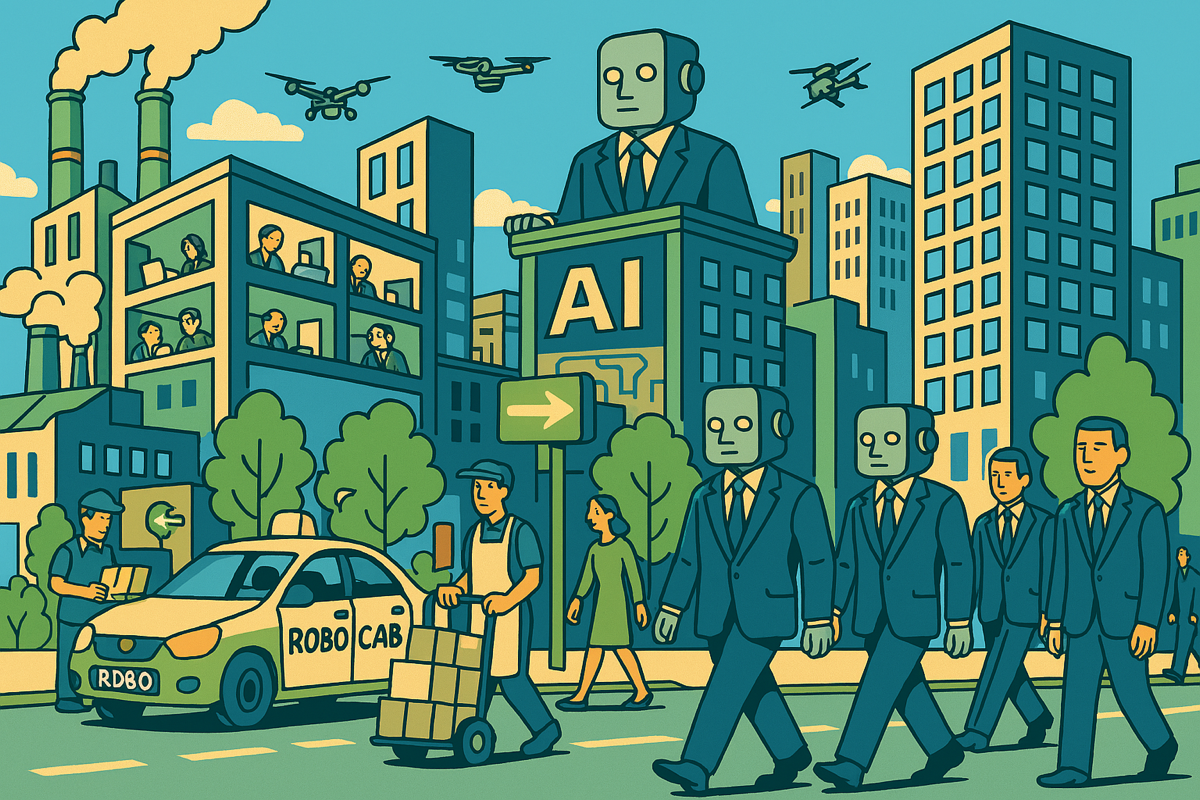

Stepping back, there is an eerie inevitability to this story. I don't believe this is a story about the genius of Claude Shannon or the engineering prowess of Alec Radford. These leaps of thought were inspired, but no singular genius was required. The economic and status incentives aligned behind each obstacle like water behind a dam, the flow destined to break through.

Underlying all of this striving is a collective dream: to be unburdened. We seek a helping hand in a harsh universe. Even a genie to solve our most impossible problems. As Shannon liberated primitive logic, we hope to liberate intelligence. And we hope it will serve us well.

In 2014, Elon Musk, who would co-found OpenAI the following year, publicly expressed some concerns:

> With artificial intelligence, we are summoning the demon. You know all those stories where there's the guy with the pentagram and the holy water, and he's like, yeah, he's sure he can control the demon? Doesn't work out.

The same man is now scaling the largest training clusters on earth, desperate to win the race to AGI. Perhaps his change of heart reflects new confidence in AI safety. Or perhaps he surrendered to momentum, demonstrating the gravitational pull of progress.

The computer revolution has been astonishing. But to live through the birth of AI is to experience a peculiar kind of vertigo. There is much to celebrate, but there is also much to dread. Something fundamental may be unfolding in our corner of the universe, driven by mundane yet inexorable forces. We don’t know where we’re going or what will happen when we get there. No one chose this path. No government decreed it. No oligarch or corporation willed it to be. Our society is less steered than ridden. We are all passengers watching the horizon bend.