Charles Darwin: The Godfather of Reinforcement Learning

Did Charles Darwin, in explaining the origins of species, also articulate the foundational logic of reinforcement learning?

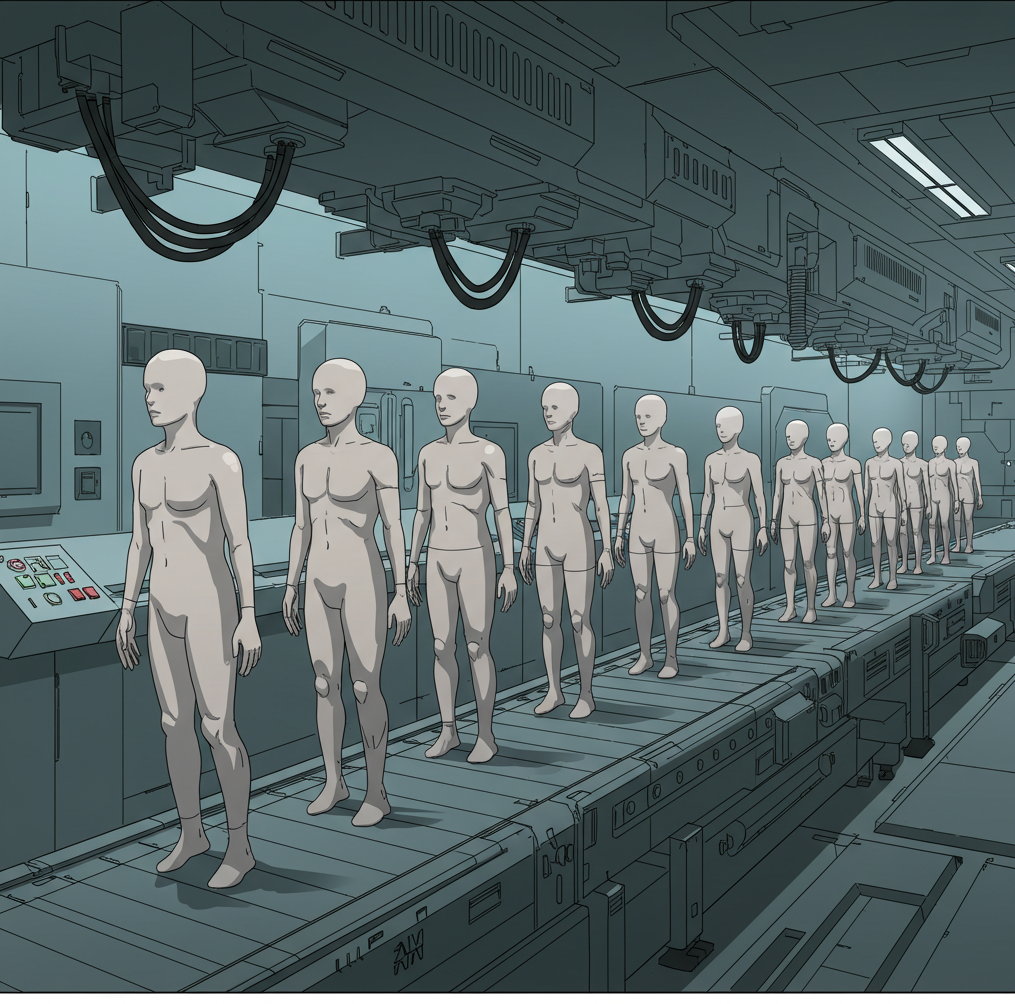

Consider the mechanics. Reinforcement learning involves an agent exploring an environment, taking actions, and adjusting its behavior based on rewards or penalties. Its goal is to discover a policy (a strategy for choosing actions) that maximizes expected cumulative reward over time. To do this, it must balance exploration (testing novel actions) with exploitation (repeating actions that have already paid off). Now map this onto Darwin's theory: random mutations and genetic recombination generate variation (exploration). Organisms with traits that enhance survival and reproduction thrive and pass those traits to offspring (exploitation). Over generations, populations accumulate adaptive "policies" for their environments. The two systems differ in substrate and timescale, but their algorithmic skeletons align. Both are optimization processes that navigate uncertainty through iterative trial and error, feedback, and incremental refinement.
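To make the explore-exploit balance concrete, here is a minimal sketch of an epsilon-greedy agent on a toy multi-armed bandit. The function name, the Gaussian reward noise, and the example arm means are assumptions chosen for illustration; this is one of the simplest possible reinforcement learners, not a general-purpose one.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Run epsilon-greedy on a toy bandit whose arms pay noisy rewards.

    true_means: hypothetical average payoff of each arm (unknown to the agent).
    Returns the agent's value estimates, pull counts, and total reward.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward observed per arm
    counts = [0] * n_arms       # how often each arm has been pulled
    total_reward = 0.0

    for _ in range(steps):
        if rng.random() < epsilon:
            # Exploration: try a random arm, even one that looks bad so far.
            arm = rng.randrange(n_arms)
        else:
            # Exploitation: pull the arm with the best estimate so far.
            arm = max(range(n_arms), key=lambda a: estimates[a])

        # The environment answers with a noisy reward (assumed Gaussian).
        reward = rng.gauss(true_means[arm], 1.0)

        # Incremental update of the running average for the chosen arm.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward

    return estimates, counts, total_reward


if __name__ == "__main__":
    estimates, counts, total = epsilon_greedy_bandit([0.2, 0.5, 1.0])
    print("estimated values:", [round(e, 2) for e in estimates])
    print("pull counts:", counts)
```

In the Darwinian reading, the random pulls play the role of mutation, and the preference for the best-known arm plays the role of differential reproduction.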

This alignment isn't superficial. In reinforcement learning, the agent's behavior is shaped by a reward function, an explicit signal that quantifies success. In evolution, the reward function is implicit but operates through multiple, interacting mechanisms. Consider how a cheetah's hunting success affects its survival: immediate caloric rewards combine with longer-term effects on reproductive fitness, creating a complex reward landscape. This multi-layered feedback system shapes not just individual traits but entire developmental programs. The way a cheetah's muscles and skeletal structure develop during growth, its hunting instincts, and even its social behaviors all emerge from this implicit reward structure. Darwin's great insight was that nature's apparent design emerges without a designer, just as reinforcement learners achieve complex goals without preprogrammed instructions. Both are systems that climb toward greater complexity without a prior blueprint.

Skeptics will rightly point to distinctions. Evolution works across generations, while RL agents adapt within their lifetimes. Natural selection lacks centralized agency; there's no "learner" directing the process, only differential survival. But these differences mask a deeper unity. Evolution can be framed as a population-level reinforcement learner where each generation represents an update to the policy. The "agent" is the gene pool, the "actions" are genetic variations, and the "environment" is the ecosystem dispensing rewards via survival and reproduction. Recent AI research even borrows this logic: evolutionary algorithms, which can optimize neural networks by mimicking natural selection, treat entire populations of models as exploratory cohorts, culling low performers and copying high performers with small random changes. Here, Darwin's process isn't just analogous; it's operationalized as code.
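A minimal sketch of such an evolutionary algorithm follows. Everything specific in it is an assumption made for illustration: the toy `fitness` function, the truncation selection that keeps the top half, and the Gaussian mutation. Real neuroevolution systems are considerably more elaborate.

```python
import random


def fitness(genome):
    """Toy fitness (hypothetical): highest when every gene equals 1.0."""
    return -sum((g - 1.0) ** 2 for g in genome)


def evolve(pop_size=30, genome_len=5, generations=60, mutation_scale=0.1, seed=0):
    """Evolve a population by selection and mutation; return best genome and history."""
    rng = random.Random(seed)
    population = [
        [rng.uniform(-1.0, 1.0) for _ in range(genome_len)]
        for _ in range(pop_size)
    ]
    best_per_generation = []

    for _ in range(generations):
        # The environment "scores" each individual.
        ranked = sorted(population, key=fitness, reverse=True)
        best_per_generation.append(fitness(ranked[0]))

        # Selection: the lower half is culled.
        survivors = ranked[: pop_size // 2]

        # Inheritance with variation: each child copies a random survivor
        # and perturbs every gene with small Gaussian noise.
        children = [
            [g + rng.gauss(0.0, mutation_scale) for g in rng.choice(survivors)]
            for _ in range(pop_size - len(survivors))
        ]

        # Survivors persist unchanged, so the best fitness never decreases.
        population = survivors + children

    best = max(population, key=fitness)
    return best, best_per_generation


if __name__ == "__main__":
    best, history = evolve()
    print("first-generation best fitness:", round(history[0], 3))
    print("last-generation best fitness:", round(history[-1], 3))
```

Note that no individual here learns anything during its "lifetime". All improvement happens at the level of the population, which is the sense in which the gene pool acts as the learner.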

The inverse is equally revealing. Modern evolutionary biologists increasingly describe natural selection in terms of information processing. In a landmark paper, "The Algorithm of Natural Selection" (Nature, 2019), researchers demonstrated how genetic regulatory networks perform computations analogous to backpropagation in neural networks. They showed how mutation rates in bacteria dynamically adjust based on environmental stress—a striking parallel to how RL agents modulate their exploration rates. Other studies have mapped how gene expression patterns optimize over time using mathematics eerily similar to temporal difference learning algorithms. Genetic variation acts as a search algorithm, probing the space of possible traits; environmental pressures compute fitness scores; inheritance propagates successful "solutions."
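For readers unfamiliar with the comparison, the standard tabular TD(0) update from reinforcement learning is shown below. It is the textbook rule, included only as a reference point, and is not a formula taken from the studies mentioned above. Here $V(s)$ is the estimated value of state $s$, $\alpha$ is a learning rate, $\gamma$ is a discount factor, and $r_{t+1}$ is the reward received after leaving state $s_t$:

$$
V(s_t) \leftarrow V(s_t) + \alpha \left[ r_{t+1} + \gamma V(s_{t+1}) - V(s_t) \right]
$$

The bracketed term is the prediction error: the gap between what the system expected and what it then observed, which drives each incremental correction.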

This reframing has practical implications for AI development. The study of evolutionary strategies like bet-hedging—where organisms maintain diverse traits as insurance against environmental change—has inspired more robust RL algorithms. Natural selection's success in generating general-purpose solutions suggests ways to avoid overfitting in artificial systems. Even the challenge of reward specification in RL might benefit from studying how biological reward systems balance competing pressures across different timescales.

What emerges from this synthesis is a unifying view of learning itself. Darwin showed that nature "learns" through trial, error, and reward long before the concept was formalized in psychology or computer science. His work hints that learning is not merely something minds do but a fundamental property of complex systems interacting with their environments. This perspective dissolves the boundary between the born and the built. A bird's beak fine-tuned over millennia and a robot's gait optimized in simulation are both products of the same basic algorithm—explore, evaluate, exploit.

Darwin deserves recognition not just as a biologist but as one of computing's earliest algorithmic thinkers. He discovered what may be the most fundamental algorithm of all: a set of rules that, when followed iteratively, produce adaptive intelligence from chaos. Evolution is Earth's original learning system, running for billions of years before we built our first computer. Modern reinforcement learning, with its explore-exploit tradeoffs and reward functions, isn't just inspired by natural selection; it's a formal implementation of Darwin's core insights. Understanding this lineage isn't merely an intellectual curiosity. It reframes the history of computer science itself, placing Darwin's algorithmic breakthrough alongside other pivotal discoveries in how information can be processed and intelligence can emerge.