DeepSeek Foreshadows Recursive Self-Improvement

DeepSeek's recent achievement with their R1 model should serve as a stark warning—one that leaders at all levels of society would do well to heed.

I.J. Good spent World War II at Bletchley Park working under Alan Turing on the German Enigma codes. In 1965, Good made a simple observation that haunts us to this day: an ultraintelligent machine could design better machines, which could in turn design still better machines, ad infinitum. This loop of self-improvement meant that an intelligence explosion, once begun, would be inevitable. Good writes:

> Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an "intelligence explosion," and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

DeepSeek just demonstrated how a small, talented team with a couple of clever insights can achieve a significant, unexpected jump in capabilities. If a team of human researchers can achieve such dramatic performance improvements, what might an AI system accomplish when it can engineer at digital speeds and explore vast solution spaces?

The race for AGI is intensifying. Frontier labs like OpenAI face mounting demands to accelerate progress while managing costs. Using AI to assist in its own development (first for testing, then for debugging, soon for architecture design) becomes not just sensible but necessary to stay competitive. What begins as practical cost-cutting quietly evolves into something profound: AI systems helping to create better AI systems. The theoretical feedback loop that Good described is emerging not through choice, but through market forces and engineering sprints. Each small step toward automation feels natural in isolation, even as the steps together sum to something that would have seemed like science fiction just years ago.

The reality is that we may be drastically underestimating both the speed at which powerful capabilities could emerge and the diversity of design paths that may produce them. We are likely to face multiple sudden, discontinuous jumps in capability in the coming years. Once AI begins doing the pioneering research, it might discover far better uses for all the computing infrastructure we have built up, potentially through novel architectural designs, leading to profound and destabilizing advances.

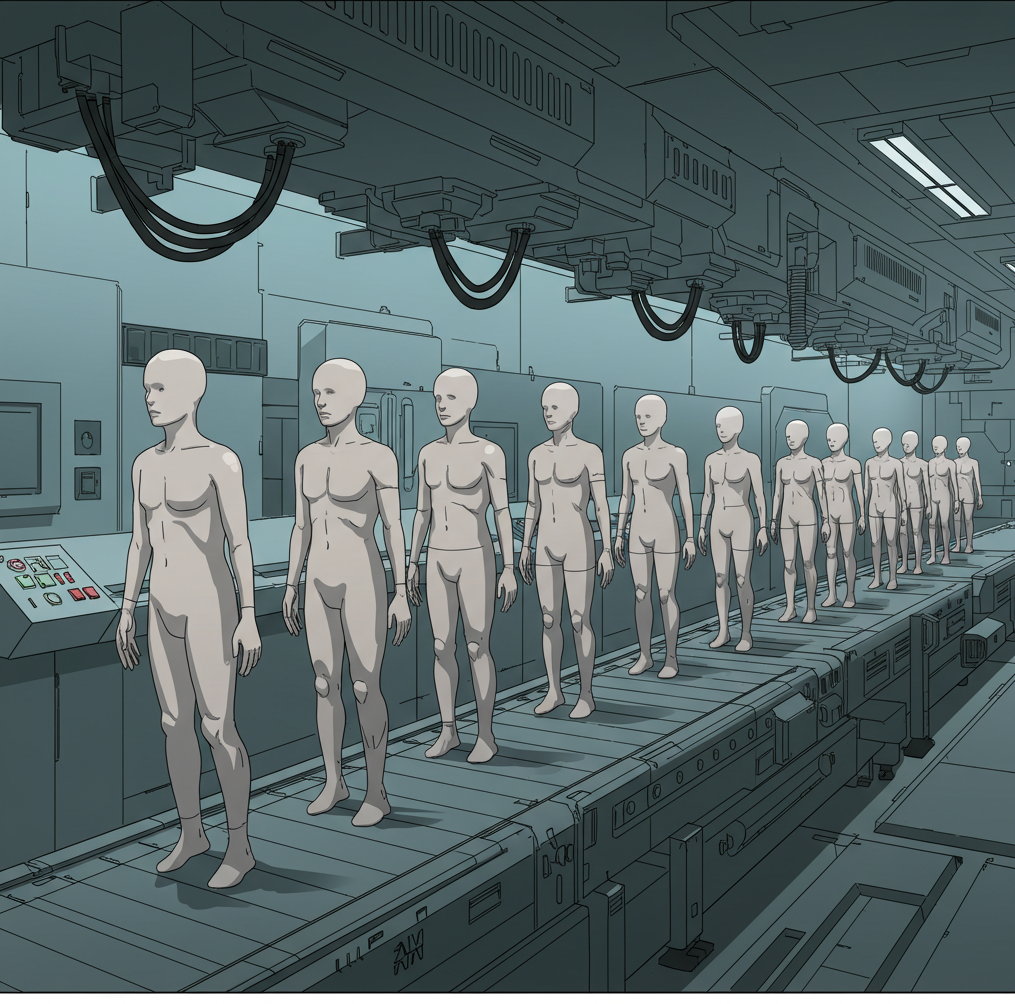

The implications become even starker when we consider the potential for multiple AI systems working in parallel. What might a swarm of interconnected AI agents, each specializing in a different aspect of AI development and working in concert, be capable of? Some agents could work on architectural improvements, others on training efficiency, and others on novel learning approaches, creating a feedback loop of accelerating improvement.

This scenario exposes a critical weakness in our current approach to AI safety. We've been operating under the assumption that we'll have time to react, to put safeguards in place as AI capabilities gradually improve. DeepSeek's breakthrough suggests this assumption may be fatally flawed. This is not to suggest that all is lost or that panic is warranted. Rather, it's a call for a fundamental reassessment of our approach to AI safety. We need frameworks that actively anticipate rapid, discontinuous advances. It's a sort of all-hands-on-deck moment. Calibrate your emotions to the facts. If you're in a position to contribute to this effort, now would be a good time to enter the fray.

To provide a little outside-view context, consider this 1993 passage from the science fiction author Vernor Vinge, speculating about the technological singularity:

> But it's much more likely that devising the software will be a tricky process, involving lots of false starts and experimentation. If so, then the arrival of self-aware machines will not happen till after the development of hardware that is substantially more powerful than humans' natural equipment.
>
> ...And what of the arrival of the Singularity itself? What can be said of its actual appearance? Since it involves an intellectual runaway, it will probably occur faster than any technical revolution seen so far. The precipitating event will likely be unexpected – perhaps even to the researchers involved. ("But all our previous models were catatonic! We were just tweaking some parameters .... ") If networking is widespread enough (into ubiquitous embedded systems), it may seem as if our artifacts as a whole had suddenly wakened.
>
> And what happens a month or two (or a day or two) after that? I have only analogies to point to: The rise of humankind. We will be in the Post-Human era. And for all my rampant technological optimism, sometimes I think I'd be more comfortable if I were regarding these transcendental events from one thousand years remove ... instead of twenty.

The DeepSeek breakthrough should serve as a warning shot: not from China, but from the coming phase change in AI development toward recursive self-improvement. The time to address these challenges is now, while we still have the luxury of deliberation. The next breakthrough might not come with a public announcement and an academic paper. It might instead manifest as a sudden shift in capabilities that we're ill-prepared to handle. The first step in keeping ourselves safe is deep realism about this issue: assume that such a shift will happen. It's time to abandon comfortable assumptions about the predictability of AI progress and embrace a more nimble, adaptive approach to ensuring that artificial intelligence doesn't lead to a disastrous cascade of events.