The Origin of the Attention Mechanism in LLMs

There has been some recent discussion on X about the origin of Transformers—specifically the attention mechanism—sparked by this post:

Wow! "Attention is All You Need" (i.e., Transformers) was inspired by the Alien's communication style in the movie Arrival. pic.twitter.com/7hYRkhOF95

— Carlos E. Perez (@IntuitMachine) December 1, 2024

Andrej Karpathy, a founding member of OpenAI, felt this was contrary to his recollection, so he did us all a favor and contacted the relevant party to clarify the history. This is a seminal moment in modern AI, so I have transcribed the email and added some follow-up commentary below.

The (true) story of development and inspiration behind the "attention" operator, the one in "Attention is All you Need" that introduced the Transformer. From personal email correspondence with the author @DBahdanau ~2 years ago, published here and now (with permission) following… pic.twitter.com/hKD7gDcexS

— Andrej Karpathy (@karpathy) December 3, 2024

Here’s the transcription of the text in the image:

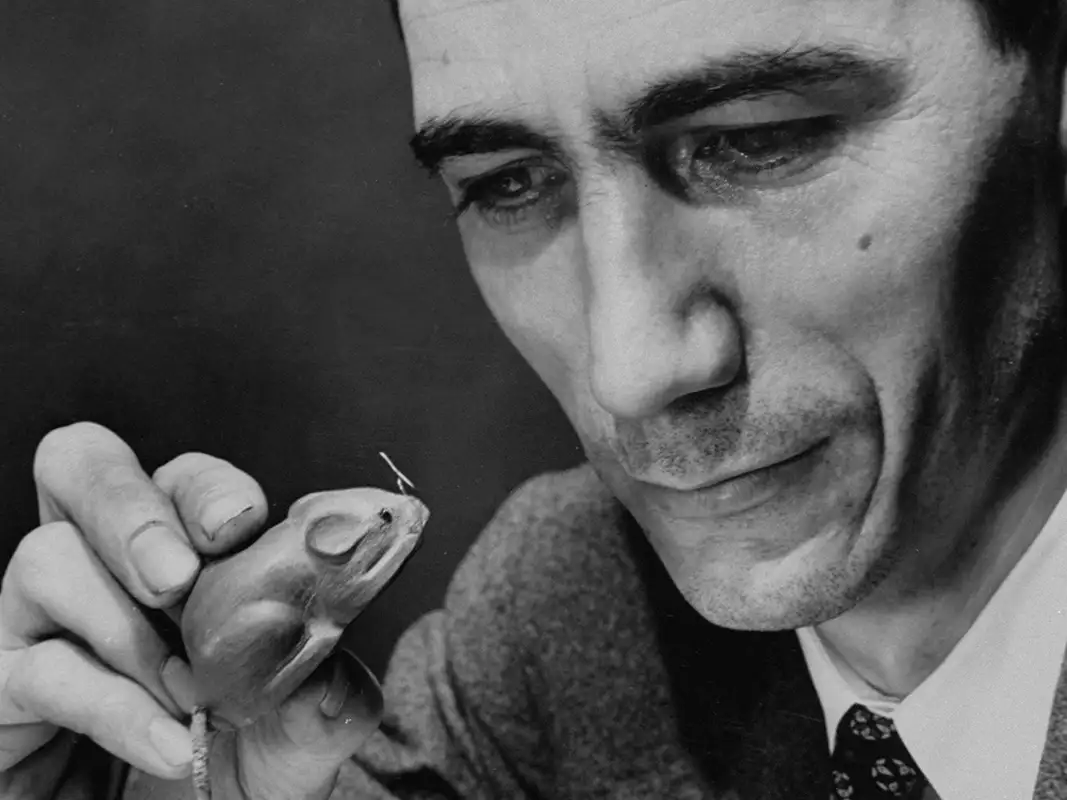

Dzmitry Bahdanau to Andrej

Hi Andrej,

Happy to tell you the story as it happened 8 years ago!

I came to Yoshua’s lab as an intern, after having done my first year of MSc at Jacobs University with Herbert Jaeger.

I told Yoshua I’m happy to work on anything. Yoshua put me on the machine translation project to work with Kyunghyun Cho and the team. I was super skeptical about the idea of cramming a sequence of words in a vector. But I also really wanted a PhD offer. So I rolled up my sleeves and started doing what I was good at – writing code, fixing bugs and so on. At some point I showed enough understanding of what’s going on that Yoshua invited me to do a PhD (2014 was a good time when that was enough – good old times!). I was very happy and I thought it’s time to have fun and be creative.

So I started thinking about how to avoid the bottleneck between encoder and decoder RNN. My first idea was to have a model with two “cursors”, one moving through the source sequence (encoded by a BiRNN) and another one moving through the target sequence. The cursor trajectories would be marginalized out using dynamic programming. Kyunghyun Cho recognized this as an equivalent to Alex Graves’ RNN Transducer model. Following that, I may have also read Graves’ handwriting recognition paper. The approach looked inappropriate for machine translation though.

The above approach with cursors would be too hard to implement in the remaining 5 weeks of my internship. So I tried instead something simpler – two cursors moving at the same time synchronously (effectively hard-coded diagonal attention). That sort of worked, but the approach lacked elegance.

So one day I had this thought that it would be nice to enable the decoder RNN to learn to search where to put the cursor in the source sequence. This was sort of inspired by translation exercises that learning English in my middle school involved. Your gaze shifts back and forth between source and target sequence as you translate. I expressed the soft search as softmax and then weighted averaging of BiRNN states. It worked great from the very first try to my great excitement. I called the architecture RNNSearch, and we rushed to publish an ArXiv paper as we knew that Ilya and co at Google are somewhat ahead of us with their giant 8 GPU LSTM model (RNN Search still ran on 1 GPU).

As it later turned out, the name was not great. The better name (attention) was only added by Yoshua to the conclusion in one of the final passes.

We saw Alex Graves’ NMT paper 1.5 months later. It was indeed exactly the same idea, though he arrived at it with a completely different motivation. In our case, necessity was the mother of invention. In his case it was the ambition to bridge neural and symbolic AI, I guess? Jason Weston’s and co Memory Networks paper also featured a similar mechanism.

I did not have the foresight to think that attention can be used at a lower level, as the core operation in representation learning. But when I saw the Transformer paper, I immediately declared to labmates that RNNs are dead.

To go back to your original question: the invention of “differentiable and data-dependent weighted average” in Yoshua’s lab in Montreal was independent from Neural Turing Machines, Memory Networks, as well as some relevant cog-sci papers from the 90s (or even 70s; can give you any links though). It was the result of Yoshua’s leadership in pushing the lab to be ambitious, Kyunghyun Cho’s great skills at running a big machine translation project staffed with junior PhD students and interns, and lastly, my own creativity and coding skills that had been honed in years of competitive programming. But I don’t think that this idea would wait for any more time before being discovered. Even if myself, Alex Graves and other characters in this story did not do deep learning at that time, attention is just the natural way to do flexible spatial connectivity in deep learning. It is a nearly obvious idea that was waiting for GPUs to be fast enough to make people motivated and take deep learning research seriously. Ever since I realized this, my big AI ambition is to start amazing applied projects like that machine translation project. Good R&D endeavors can do more for progress in fundamental technologies than all the fancy theorizing that we consider the “real” AI research.

That’s all! Very curious to hear more about your educational AI projects (I heard some rumors from Harm de Vries :)).

Cheers,

Dima

Here is some context on the history:

In this email, Dzmitry Bahdanau reflects on his role in an influential breakthrough in AI: the invention of the attention mechanism. This concept, which allows AI models to focus on the most relevant parts of their input, is now central to LLMs such as ChatGPT.

Dzmitry recounts how, during an internship in Yoshua Bengio’s lab in 2014, he tackled a critical challenge in machine translation: how to overcome the bottleneck of compressing an entire sentence into a single fixed representation before generating a translation. Inspired by how human translators shift their focus between source and target sentences, he developed a mechanism that let the model dynamically “attend” to specific words in the input as it generated each word of the output.

This simple yet powerful idea, assigning attention weights to different parts of the input, proved transformative. It not only improved translation quality but also paved the way for attention to be used across AI research, from natural language processing to computer vision. It is so fundamental that the Transformer architecture behind modern AI models is built around it.
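To make the "soft search" from the email concrete, here is a minimal sketch of a softmax followed by a weighted average of encoder states. It is an illustration, not the RNNSearch implementation: the function names, the toy vectors, and the dot-product scoring function are all assumptions, since the email does not say how the scores were computed.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product, used here as a stand-in scoring function (an assumption)."""
    return sum(x * y for x, y in zip(a, b))


def soft_search(decoder_state, encoder_states, score):
    """Return (weights, context) for one decoding step.

    score(decoder_state, h) gives a relevance score for encoder state h.
    The context is the weighted average of the encoder states.
    """
    weights = softmax([score(decoder_state, h) for h in encoder_states])
    dim = len(encoder_states[0])
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Toy inputs (made up): three source positions, 2-dimensional states.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.0, 2.0]

weights, context = soft_search(decoder_state, encoder_states, dot)
print(weights)  # one weight per source position
print(context)  # a single vector summarizing the source for this step
```

Because every step is differentiable, the weights can be learned by gradient descent along with the rest of the model, which is what separates this from a hard-coded cursor position.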

What makes this email special is its candid portrayal of innovation: the skepticism, the trial and error, and the collaborative energy of a fast-moving field. It’s also a reminder of how quickly ideas evolve—from Dzmitry’s initial implementation of attention on a single GPU to today’s world-changing applications of AI.

This story highlights the interplay between human creativity and technological progress, as well as the enduring power of good ideas in shaping history.